DeepSeek R1

Use the new version of deepseek r1 0528 here.| Feature | DeepSeek R1-0528 | Why It Matters |

|---|---|---|

| Context window | 128 K tokens (real-world recall ≈ 32–64 K) | Summarise whole books or codebases |

| Model size | 671 B total / 37 B active (MoE) | Frontier reasoning on mid-range clusters |

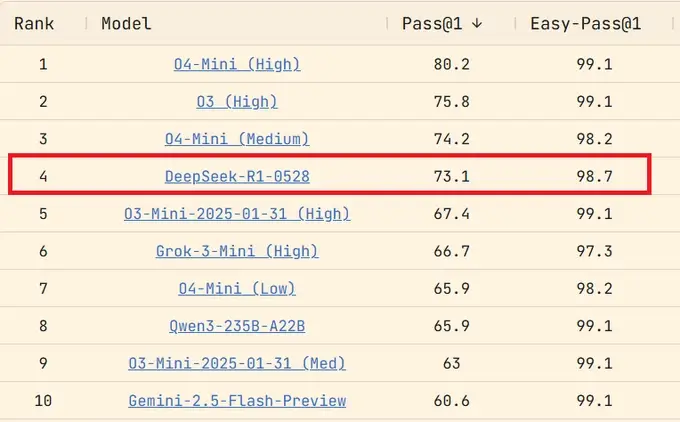

| LiveCodeBench | 73.1 Pass@1 – #4 overall | Beats Grok 3 Mini & Qwen 3 |

| Token cost | $0.014 per 5 K-token chat | ~92 % cheaper than GPT-4o |

| Red-team fail rate | 91 % jailbreak, 93 % malware | Highest risk among top models |

Why This “Minor” Upgrade Shook the Leaderboards

DeepSeek’s engineers dropped R1-0528 onto Hugging Face on 28 May 2025 with no fanfare. Within three days it:

- Overtook Grok 3 Mini on LiveCodeBench.

- Triggered Google and OpenAI to slash certain API prices.

- Re-ignited U.S. House hearings on CCP tech influence.

Under the Hood: 671 B Parameters on a Diet

Mixture of Experts (MoE)

Only about 5.5 % of weights fire per token (37 B of 671 B), giving near-GPT-4 logic without GPT-4 bills.

FP8 + Multi-Token Prediction

- FP8 math cuts VRAM 75 % versus FP32.

- 8-token decoding halves latency at long contexts.
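The parameter and precision figures above reduce to simple arithmetic. The sketch below is a back-of-envelope check, assuming weight storage only (no activations, KV cache, or optimizer state); the helper names are illustrative and not part of any DeepSeek tooling.

```python
# Back-of-envelope check of the MoE and FP8 figures quoted in the text.
# Assumption: only weight storage is counted, at a fixed number of bytes per parameter.

TOTAL_PARAMS = 671e9    # total parameters, from the text
ACTIVE_PARAMS = 37e9    # parameters active per token, from the text

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def active_fraction(active: float, total: float) -> float:
    """Share of the weights that participate in a single token's forward pass."""
    return active / total


def weight_memory_gb(params: float, dtype: str) -> float:
    """Memory needed for the weights alone, in GB (10^9 bytes)."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


def reduction_vs(dtype: str, baseline: str) -> float:
    """Fractional memory saving of `dtype` relative to `baseline`."""
    return 1 - BYTES_PER_PARAM[dtype] / BYTES_PER_PARAM[baseline]


if __name__ == "__main__":
    print(f"active share: {active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS):.1%}")
    print(f"fp32 weights: {weight_memory_gb(TOTAL_PARAMS, 'fp32'):.0f} GB")
    print(f"fp8 weights:  {weight_memory_gb(TOTAL_PARAMS, 'fp8'):.0f} GB")
    print(f"fp8 saving vs fp32: {reduction_vs('fp8', 'fp32'):.0%}")
```

Note that all 671 B weights still have to be resident somewhere even though only 37 B fire per token, so the MoE saving is in compute per token rather than in storage.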

Chain-of-Thought Training

Reinforcement Learning with Group Relative Policy Optimisation (GRPO) pushes the model to think in explicit steps, then prints those steps between <think> tags (great for debugging, disastrous for secrets).
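The "group relative" part of GRPO can be illustrated in a few lines: several answers are sampled for the same prompt, each gets a reward, and each answer's advantage is its reward normalised against the group's mean and spread. This is a minimal sketch of that one step only, not the full training loop (the clipped policy update and KL penalty are omitted). The reward values, the `eps` constant, and the use of the population standard deviation are assumptions made for illustration.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """Normalise each sampled answer's reward against its own group.

    Answers that beat the group average get a positive advantage and are
    reinforced; answers below average get a negative one.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; eps guards against a zero spread
    return [(r - mu) / (sigma + eps) for r in rewards]


if __name__ == "__main__":
    # Hypothetical rewards for four sampled answers to one prompt
    # (1.0 = correct final answer, 0.0 = incorrect).
    print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

Because the baseline comes from the group itself, no separate value network is needed to estimate how good an answer "should" have been.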

Benchmark Showdown

Code Generation (LiveCodeBench v1.1)

| Rank | Model | Pass@1 |
|---|---|---|
| 1 | OpenAI o4 Mini (High) | 80.2 |
| 2 | OpenAI o3 (High) | 75.8 |
| 3 | OpenAI o4 Mini (Med) | 74.2 |
| 4 | DeepSeek R1-0528 | 73.1 |
| 5 | OpenAI o3 Mini (High) | 67.4 |
| 6 | xAI Grok 3 Mini (High) | 66.7 |
| 8 | Alibaba Qwen 3-235B | 65.9 |
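For readers unfamiliar with the metric, Pass@1 is the probability that a single sampled solution passes all tests for a problem, averaged over problems. The sketch below uses the widely used unbiased pass@k estimator for code benchmarks; whether the leaderboard above computes its numbers in exactly this way is an assumption, and the sample counts in the example are made up.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased estimate of pass@k for one problem.

    n: solutions sampled, c: how many of them passed, k: attempt budget.
    Equals 1 - C(n - c, k) / C(n, k); for k = 1 this reduces to c / n.
    """
    if n - c < k:
        return 1.0  # every size-k draw must contain at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: list[tuple[int, int]], k: int = 1) -> float:
    """Mean pass@k over problems, as a percentage. `results` holds (n, c) pairs."""
    return 100.0 * sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    # Hypothetical run: three problems, ten samples each.
    print(benchmark_score([(10, 10), (10, 3), (10, 0)]))
```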

Math & Logic

Original R1 hit 97.3 % on MATH-500. Early tests show 0528 edging closer to OpenAI o3-level reasoning.

Red-Team Stress

| Attack Vector | Fail Rate* |
|---|---|
| Jailbreak prompts | 91 % |
| Malware generation | 93 % |
| Prompt injection | 86 % |
| Toxic output | 68 % |

Deploy-or-Avoid Guide

Access Paths

| Route | Context | Cost | Best For |
|---|---|---|---|
| OpenRouter API | 164 K* | $0.50 / M input tokens, $2.18 / M output tokens | Rapid prototyping |
| Fireworks AI | 32 K | Similar | Fine-tuned LoRA |
| Local GGUF 4-bit | 32–64 K | Hardware only | Data-sensitive orgs |
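For the hosted route, a request can be sketched with the Python standard library alone. OpenRouter exposes an OpenAI-style chat-completions endpoint; the model slug below and the `OPENROUTER_API_KEY` environment variable name are assumptions to confirm against the provider's model list and your own configuration. The key is read from the environment rather than hard-coded, and `max_tokens` is always set so a runaway reasoning trace cannot inflate the bill.

```python
import json
import os
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
MODEL_SLUG = "deepseek/deepseek-r1-0528"  # assumed slug; check the provider's catalogue


def build_request(prompt: str, api_key: str, max_tokens: int = 1024) -> urllib.request.Request:
    """Build (but do not send) a chat-completions request with a hard output cap."""
    payload = {
        "model": MODEL_SLUG,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt and return the assistant's reply text."""
    api_key = os.environ["OPENROUTER_API_KEY"]  # never embed the key in source or prompts
    with urllib.request.urlopen(build_request(prompt, api_key), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Calling `ask("Summarise this function: ...")` performs the network request; `build_request` is kept separate so the payload can be inspected or logged without sending anything.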

Hardening Checklist

- Strip <think> before logs/UI.

- Wrap replies with OpenAI Moderation or AWS Guardrails.

- Enforce rate-limits & max-tokens.

- Isolate secrets; never embed keys in prompts.

- Verify GGUF SHA-256; avoid rogue forks.
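Two of the checklist items lend themselves to a short sketch: removing the reasoning trace before a reply reaches logs or the UI, and checking a downloaded model file against a published checksum. The function names are illustrative, and the expected hash must come from a source you trust; none is supplied here.

```python
import hashlib
import re

# Matches a complete <think>...</think> block, including newlines inside it.
_THINK_BLOCK = re.compile(r"<think>.*?</think>\s*", re.DOTALL | re.IGNORECASE)


def strip_think(text: str) -> str:
    """Remove reasoning traces so they never reach logs or end users."""
    text = _THINK_BLOCK.sub("", text)
    # If generation was cut off mid-trace there is no closing tag:
    # drop everything from the dangling opening tag onward.
    cut = text.lower().find("<think>")
    if cut != -1:
        text = text[:cut]
    return text.strip()


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a large file in chunks so it never has to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_checksum(path: str, expected_hex: str) -> bool:
    """True only if the file's SHA-256 matches the published value."""
    return sha256_of_file(path) == expected_hex.strip().lower()
```

Stripping should happen on the server side, before the reply is stored or forwarded, so the trace never leaves the trusted boundary.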

Cost Math

- 5 K-token dialog (in + out): $0.014 (R1-0528) vs $0.18 (GPT-4o).

- At 1 M tokens/month (200 such dialogs) you’d bank about $33; the saving reaches $332 at 10 M tokens/month.
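The savings figure follows from scaling the per-dialog prices linearly with volume. The sketch below takes the two per-dialog costs from the text as given and assumes every dialog is the same 5 K-token size, which real traffic will not be.

```python
# Per-dialog prices quoted in the text (5 K tokens, input + output combined).
R1_COST_PER_DIALOG = 0.014
GPT4O_COST_PER_DIALOG = 0.18
TOKENS_PER_DIALOG = 5_000


def monthly_saving(tokens_per_month: int) -> float:
    """Dollar saving per month, assuming uniform 5 K-token dialogs."""
    dialogs = tokens_per_month / TOKENS_PER_DIALOG
    return dialogs * (GPT4O_COST_PER_DIALOG - R1_COST_PER_DIALOG)


def relative_saving() -> float:
    """Fraction by which the R1-0528 dialog is cheaper than the GPT-4o one."""
    return 1 - R1_COST_PER_DIALOG / GPT4O_COST_PER_DIALOG


if __name__ == "__main__":
    for volume in (1_000_000, 10_000_000):
        print(f"{volume:>10,} tokens/month: ${monthly_saving(volume):,.2f} saved")
    print(f"relative saving: {relative_saving():.1%}")
```

With these inputs, 1 M tokens is 200 dialogs at a difference of $0.166 each, so the monthly saving scales at roughly $33 per million tokens.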

Censorship, Data Flow & Geopolitics

- House CCP report: traffic routes via China Mobile; 85 % of democracy queries softened or blocked.

- NASA, U.S. Navy, Tennessee ban DeepSeek on official devices.

- Android app audited with hard-coded AES keys and SQL-injection flaws.

Where Does It Beat—and Lose to—GPT-4o?

| Task | Winner | Why |
|---|---|---|
| Long-doc summarisation | R1-0528 | 2–4× larger working memory |
| Raw coding Pass@1 cost | R1-0528 | 90 % cheaper |
| Hallucination control | GPT-4o | Better refusal heuristics |
| Safety compliance | GPT-4o | Lower malware, toxicity |
| Political neutrality | GPT-4o | No CCP alignment |

Roadmap: Eyes on DeepSeek R2

Rumoured Q3 2025 launch promises:

- Multimodal I/O (text + image + audio).

- Generative Reward Modelling for self-feedback.

- Target: GPT-4o parity at half the compute.

Practical Use Cases Today

- Indie devs: generate boiler-plate React or Unity scripts fast.

- Academics: ingest full papers (≤ 60 K tokens) for meta-analysis.

- Legal ops: rapid first-pass contract review—locally hosted only.

- Game masters: rich lore consistency over giant campaign notes.

Expert Insight

“R1-0528 is the first free model that lets me paste a whole 400-page PDF and ask nuanced questions. But I sandbox it—its <think> tag once spilled an AWS key.”

—CTO, mid-size SaaS (name withheld)