Janus Pro

When it comes to cutting-edge developments in artificial intelligence, there’s a new leader in town: Janus Pro. Created by the up-and-coming Chinese AI startup DeepSeek, this next-generation multimodal model has quickly gained traction as a formidable competitor to well-established models like OpenAI’s DALL·E 3 and Stability AI’s Stable Diffusion. Boasting state-of-the-art performance in both multimodal understanding and image generation, Janus Pro is setting new benchmarks and capturing the attention of researchers, developers, and business leaders around the globe.

Introducing Janus Pro

What Is Janus Pro?

Developed by DeepSeek, a Chinese AI lab that first made headlines with its rapid, cost-effective large language models, Janus Pro is a multimodal, autoregressive model that processes both images and text. It’s available in two primary versions:

- Janus-Pro-1B

- Janus-Pro-7B

The Rise of Multimodal AI

AI research and deployment used to revolve around single-modality systems: language-only large language models or specialized computer-vision frameworks. In contrast, multimodal AI bridges this gap by handling more than one type of input or output, most often text and images. The goal is to bring AI one step closer to human-like cognition, where we naturally combine multiple senses.

Key Benefits of Multimodal Models:

- Contextual Understanding: A system that can see images and process text simultaneously can resolve ambiguities and gain a richer understanding.

- Task Versatility: From advanced search engines to text-to-image generation, multimodality unlocks a vast range of applications.

- Unified Workflows: Rather than juggling separate models for text analysis, classification, and image generation, a single unified system can handle all aspects of your pipeline.

Janus Pro vs. Janus: Key Upgrades and Improvements

Before Janus Pro, DeepSeek had already introduced “Janus,” a robust any-to-any AI framework capable of text and image synthesis. So what changed?

- Optimized Training Strategy

- Extended Stage I: More time spent on base-level tasks, such as pixel dependencies and foundational alignment with ImageNet data, allowing the model to better understand visual intricacies.

- Streamlined Stage II: Transitioned from using large chunks of ImageNet for pixel modeling to focusing on dense text-to-image datasets, improving generation fidelity.

- Revised Stage III (Fine-Tuning): Adjusted dataset ratios (multimodal vs. text vs. text-to-image) to maintain robust image-generation skills without sacrificing text analysis.

- Enhanced Data Quality

- Balanced Real and Synthetic Data: Janus Pro adds roughly 72 million synthetic aesthetic images to its real-world data, bringing the real-to-synthetic ratio to about 1:1 during unified pretraining. The synthetic data adds variety and stability, making the model more versatile.

- Model Scaling

- Up to 7B Parameters: Scaling the model from 1B to 7B parameters yields higher accuracy, richer detail in images, and improved language understanding.

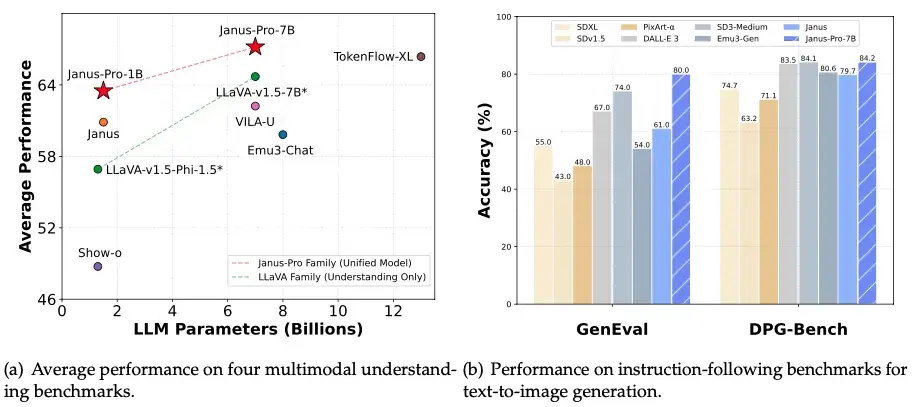

Source: DeepSeek Janus Pro Paper
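Rebalancing the Stage III data amounts to sampling fine-tuning examples from several sources in fixed proportions. The short Python sketch below illustrates that idea. The helper `sample_sources` and the source names are hypothetical, and the 5:1:4 weights (multimodal : text : text-to-image) are illustrative; check the Janus Pro paper for the exact ratio DeepSeek used.

```python
import random
from collections import Counter
from typing import Dict, List


def sample_sources(ratios: Dict[str, int], n: int, seed: int = 0) -> List[str]:
    """Draw n data-source labels with probability proportional to each ratio weight."""
    rng = random.Random(seed)  # fixed seed so the mixture is reproducible
    names = list(ratios)
    weights = [ratios[name] for name in names]
    return rng.choices(names, weights=weights, k=n)


# Illustrative (hypothetical) Stage III mixture: multimodal : text : text-to-image.
STAGE3_RATIOS = {"multimodal": 5, "text": 1, "text_to_image": 4}

if __name__ == "__main__":
    draws = sample_sources(STAGE3_RATIOS, n=10_000)
    counts = Counter(draws)
    total = sum(STAGE3_RATIOS.values())
    for name, weight in STAGE3_RATIOS.items():
        # Compare the target share with the share actually sampled.
        print(f"{name}: target {weight / total:.0%}, sampled {counts[name] / len(draws):.1%}")
```

The same helper can express the roughly 1:1 real-to-synthetic split as `{"real": 1, "synthetic": 1}`. Sampling by weight, rather than concatenating datasets, keeps the proportions stable no matter how large each source is.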

How Janus Pro Works: A Closer Look at the Decoupled Architecture

A major highlight of Janus Pro is its decoupled visual encoding. This setup addresses one of the most persistent issues in older models: a single visual encoder often had to juggle both “understanding images” and “generating images,” leading to suboptimal performance.

- Pathway A: Multimodal Understanding

- Visual Encoder: Employs a specialized backbone (e.g., SigLIP-L) that translates the image into feature vectors.

- Adaptor: Transforms these high-dimensional features into tokens aligned with the language model.

- Shared Transformer: These tokens merge with text tokens. The model then responds in natural language or performs tasks like classification, summarization, and question answering.

- Pathway B: Image Generation

- Text Tokenizer: Converts prompts into tokens for the language model.

- LLM Core: The same transformer that handles Pathway A interprets the prompt and predicts image tokens one at a time; a generation-specific adaptor maps those image tokens into the transformer’s input space.

- Image Tokenizer (VQ): A vector-quantized tokenizer that represents images as discrete tokens; its decoder “translates” the predicted tokens back into pixel space.

Key Benefits of Decoupling:

- No Cross-Interference: The model can treat the act of reading an image distinctly from generating one, preventing “collisions” in feature spaces.

- Higher Flexibility: Developers can fine-tune each pathway separately, or plug in different specialized encoders for higher resolution, enhanced object detection, or style conditioning.

- Reduced Training Conflicts: Rather than forcing one vision pipeline to serve two conflicting objectives, the model lets each encoder specialize and relies on the shared transformer to merge the two streams.
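To make the two pathways concrete, here is a toy, pure-Python sketch. It is not DeepSeek’s implementation: the tiny dimensions, the codebook, and the helper names (`linear_adaptor`, `vq_encode`, `vq_decode`, `num_image_tokens`) are hypothetical. It shows the key asymmetry. The understanding path passes continuous encoder features through an adaptor, while the generation path works with discrete codebook indices that a VQ decoder maps back toward image space. The token count assumes a downsampling factor of 16 per side, which we believe matches the VQ tokenizer Janus Pro reuses from LlamaGen.

```python
from typing import List, Sequence

Vector = List[float]


def linear_adaptor(features: Sequence[Sequence[float]], weight: Sequence[Sequence[float]]) -> List[Vector]:
    """Pathway A (toy): project each encoder feature (length d_in) to the LLM width via x @ W.

    `weight` has d_in rows and d_out columns.
    """
    d_out = len(weight[0])
    return [
        [sum(x * weight[i][j] for i, x in enumerate(feat)) for j in range(d_out)]
        for feat in features
    ]


def vq_encode(patches: Sequence[Sequence[float]], codebook: Sequence[Sequence[float]]) -> List[int]:
    """Pathway B (toy): replace each patch vector with the index of its nearest codebook entry."""
    ids = []
    for patch in patches:
        # Squared Euclidean distance from this patch to every codebook vector.
        distances = [sum((p - c) ** 2 for p, c in zip(patch, code)) for code in codebook]
        ids.append(min(range(len(codebook)), key=distances.__getitem__))
    return ids


def vq_decode(ids: Sequence[int], codebook: Sequence[Sequence[float]]) -> List[Vector]:
    """Map predicted token ids back to codebook vectors (a real decoder then upsamples to pixels)."""
    return [list(codebook[i]) for i in ids]


def num_image_tokens(image_size: int = 384, downsample: int = 16) -> int:
    """Discrete tokens for a square image, assuming `downsample` pixels per token side."""
    side = image_size // downsample
    return side * side
```

For example, with the codebook `[[0, 0], [1, 1], [1, 0]]`, the patches `[[0.9, 1.1], [0.1, -0.2], [0.8, 0.1]]` quantize to ids `[1, 0, 2]`, and `num_image_tokens()` gives 576 (a 24×24 grid) for a 384×384 image under the stated assumption.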

Comparisons with Leading Models (DALL·E 3, Stable Diffusion, and More)

Janus Pro vs. OpenAI’s DALL·E 3

- Image Generation: On benchmarks like GenEval, Janus Pro outperformed DALL·E 3 in certain areas, specifically instruction-following precision. DALL·E 3, however, has a proven track record for prompt creativity and brand recognition.

- Multimodal Understanding: DALL·E 3 is predominantly text-to-image, whereas Janus Pro includes robust image-to-text pipelines, bridging a gap for tasks like advanced OCR, captioning, and question answering about visual content.

Janus Pro vs. Stability AI’s Stable Diffusion

- User Focus: Stable Diffusion primarily targets text-to-image generation. Janus Pro covers text analysis, image captioning, and generation under one unified model.

- Data Mix: By combining real-world images and curated synthetic data, Janus Pro aims for a balanced skill set. Stable Diffusion, while widely adopted, sometimes struggles with textual fidelity in images (e.g., text-based signage).

- Benchmarks: Reports from TechCrunch, CNET, and AI community hubs suggest Janus Pro achieves top scores across a variety of test sets for both generation and understanding tasks.

- Licensing: Janus Pro’s code is released under the MIT License (use of the model weights is governed by DeepSeek’s own model license), giving it a slight edge over certain heavily restricted or research-only models.

Real-World Applications and Success Stories

E-Commerce and Marketing

- Product Mockups: Startups can quickly generate or analyze product images, test new packaging designs, and create marketing content.

- Ad Campaigns: Janus Pro’s text-to-image pipelines are excellent for designing ads tailored to specific target audiences.

Education and Research

- Visual Explanations: Professors can generate diagrams or interpret complex scientific figures automatically, assisting in the classroom or lab.

- Academic Papers: Janus Pro’s multimodal understanding can help summarize visual material (graphs, charts, slides) in scientific research.

Creative Industries

- Storyboarding: Directors and game developers can use Janus Pro for concept art, scene planning, and location design references.

- Comic and Book Illustrations: Independent authors or illustrators can quickly draft visuals based on descriptive text, cutting down creative cycles.

Data Analytics and Enterprise Use

- OCR and Beyond: Documents, forms, or infographics can be ingested, read, and summarized.

- Document Understanding: Janus Pro’s ability to interpret text and images in a unified manner speeds up data extraction and classification tasks in large corporations.

Benchmarks and Performance Highlights

Several high-profile tech news sources, including Reuters and TechCrunch, have reported on Janus Pro’s consistent outperformance on important benchmarks:

- Instruction-Following Benchmarks:

- GenEval: Janus-Pro-7B scored 80% overall on text-to-image tasks, beating out several specialized models.

- DPG-Bench: With a score above 84, Janus-Pro-7B demonstrated a strong understanding of complex prompts requiring multiple objects or contextual knowledge.

- Multimodal Understanding:

- Top-tier scores on benchmarks such as MME-Perception, POPE, and GQA.

- Advanced OCR-like capabilities showcased in user-submitted tests on Reddit’s r/LocalLLaMA forum.

- Stability and Detail:

- Janus Pro’s synthetic training data led to fewer generation artifacts and better color, composition, and clarity in images, especially at higher resolution settings.
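A GenEval figure like 80% is, as we understand the benchmark, an unweighted mean of per-category accuracies, where each category’s accuracy is the fraction of generated images that pass an automated check. The sketch below shows only that arithmetic. The category keys reflect GenEval’s six task types as we understand them (single object, two objects, counting, colors, position, color attribution), the helper names are hypothetical, and the code does not run the detector-based grading GenEval actually uses.

```python
from typing import Dict, List

# Assumed GenEval task categories (check the benchmark's documentation).
GENEVAL_TASKS = ("single_object", "two_object", "counting", "colors", "position", "color_attr")


def task_accuracy(passed: List[bool]) -> float:
    """Fraction of generated images that passed the automated check for one task."""
    if not passed:
        raise ValueError("no results for this task")
    return sum(passed) / len(passed)


def overall_score(per_task: Dict[str, float]) -> float:
    """Unweighted mean of per-task accuracies, each in [0, 1]."""
    missing = [t for t in GENEVAL_TASKS if t not in per_task]
    if missing:
        raise ValueError(f"missing tasks: {missing}")
    return sum(per_task[t] for t in GENEVAL_TASKS) / len(GENEVAL_TASKS)
```

Because the mean is unweighted, a model that is very strong on easy categories (single objects) can still be pulled down by hard ones (position, counting), which is why instruction-following gains show up clearly in this score.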

How to Access Janus Pro

Hugging Face Demo

The easiest route to testing Janus Pro is through Hugging Face Spaces, where you can:

- Input text prompts for quick image generation.

- Upload images and watch Janus Pro summarize or caption them in real time.

Local Installation

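For developers and researchers seeking full control:

- Clone the Repo: Clone DeepSeek’s Janus repository from GitHub (github.com/deepseek-ai/Janus).

- Install Dependencies: Install the Python requirements listed in the repository.

- Run Demo: Launch the repository’s demo script, which typically starts a Gradio-based web UI for easy local experimentation.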

Dockerized Environment

DeepSeek and various community members offer Docker images. These are ideal if you want a reproducible environment without messy local installations.

Tips for Getting the Most Out of Janus Pro

- Refine Your Prompts:

- Be explicit about desired elements: “Red car on a rainy street in a cinematic style.”

- Provide context if you want consistent designs or shapes: “logo in 3D style, minimalistic text in front.”

- Experiment with Sampling Methods:

- Changing sampling temperature or top-k can yield more creative or more deterministic outputs.

- Lower values give more literal, stable results; higher values give more variety but a greater risk of incoherent output.

- Use the Right Resolution:

- Janus Pro’s default image input is 384×384. For bigger or more detailed tasks, you might need advanced upscaling or fine-tuned variants.

- Leverage the Decoupled Pathways:

- For pure text tasks: focus on the LLM core.

- For image creation: write detailed, structured prompts, since the prompt is what conditions the generation pathway.

- For combined tasks (caption, Q&A): feed the system an image and text instructions together.
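The temperature and top-k settings mentioned in the tips act on the model’s next-token scores (logits) at each autoregressive step. Here is a minimal, self-contained Python sketch of that sampling rule. The function names and defaults are our own, not Janus Pro’s, and the official demo may expose different parameters.

```python
import math
import random
from typing import List, Optional, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Convert logits to probabilities; temperature > 1 flattens, < 1 sharpens."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(
    logits: Sequence[float],
    temperature: float = 1.0,
    top_k: Optional[int] = None,
    rng: Optional[random.Random] = None,
) -> int:
    """Pick a token index using temperature scaling and optional top-k filtering."""
    if temperature <= 0:
        # Greedy decoding: always take the highest-scoring token.
        return max(range(len(logits)), key=lambda i: logits[i])
    if rng is None:
        rng = random.Random()
    candidates = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    if top_k is not None:
        candidates = candidates[:top_k]  # keep only the k highest-scoring tokens
    probs = softmax([logits[i] for i in candidates], temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]
```

Setting `temperature=0` reduces to greedy decoding, the most literal option; raising the temperature or widening `top_k` lets lower-scoring tokens through, which increases variety.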

Future Outlook: Where Janus Pro Is Headed

Given DeepSeek’s rapid development cycle, evident in how quickly it launched Janus, R1, V3, and now Janus Pro, many experts predict further expansions, including:

- Higher-Resolution Visual Pathways: Potential upgrades to handle 512×512 or 1024×1024 natively for ultra-detailed generation.

- Advanced Fine-Tuning: Tools to let end-users refine Janus Pro for niche tasks, such as medical imagery, architectural blueprints, or stylized illustrations for gaming.

- Enhanced Visual Chat: Possibly integrating R1-level logical reasoning with Janus Pro’s image capabilities to produce robust “talk-and-draw” or “talk-and-analyze” systems.